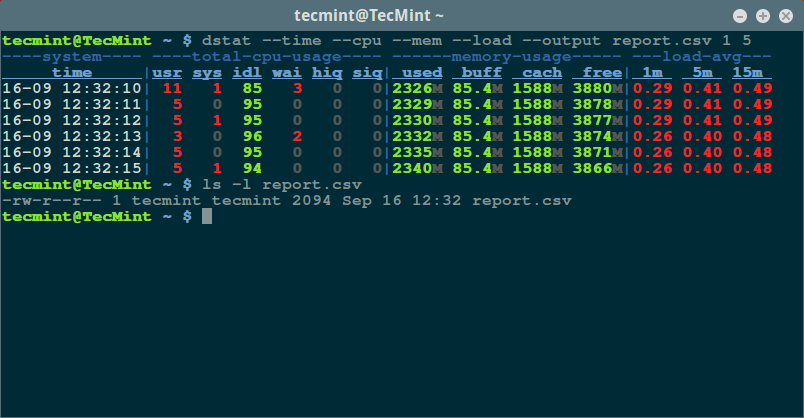

I'm a bit late here, but I'll share my command-line trick using just the default `ps`. The script keeps the watched PID (`WATCHED_PID=$(`), logs the needed resources to a file (`log_process $PID_PYTHON # log needed resources to file`) and shows the output on the terminal (`& # show output on terminal`). Terminal output: `$ bash monitor_python_script.`

`pidstat` is useful when you need extra metrics from the process(es). For example, its three most useful groups (CPU, memory and disk) contain: %usr, %system, %guest, %CPU, minflt/s, majflt/s, VSZ, RSS, %MEM, kB_rd/s, kB_wr/s, kB_ccwr/s.
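The `ps`-only approach mentioned above can be sketched in Python as follows. This is a minimal illustration, not the original script: the function names `parse_ps_fields`, `sample_ps` and `watch` are hypothetical, and it assumes a procps-style `ps` that accepts `-o pcpu= -o pmem= -o rss= -p PID`.

```python
import os
import subprocess
import time


def parse_ps_fields(line):
    """Parse one header-less `ps` line holding pcpu, pmem and rss."""
    cpu, mem, rss = line.split()
    return {"cpu_percent": float(cpu), "mem_percent": float(mem), "rss_kib": int(rss)}


def sample_ps(pid):
    """Ask `ps` for CPU %, memory % and RSS (KiB) of a single PID.

    Assumption: a procps-style `ps`. Separate -o options with an empty
    header (`name=`) suppress the header line portably.
    """
    result = subprocess.run(
        ["ps", "-o", "pcpu=", "-o", "pmem=", "-o", "rss=", "-p", str(pid)],
        capture_output=True,
        text=True,
        check=True,  # raises CalledProcessError if the PID is gone
        env={**os.environ, "LC_ALL": "C"},  # force '.' as decimal separator
    )
    return parse_ps_fields(result.stdout)


def watch(pid, interval=1.0, count=5):
    """Collect `count` samples, one every `interval` seconds."""
    samples = []
    for _ in range(count):
        samples.append(sample_ps(pid))
        time.sleep(interval)
    return samples
```

Calling `watch(os.getpid(), interval=1.0, count=3)` would sample the current interpreter three times. Note that with procps the `pcpu` column is an average over the process lifetime rather than an instantaneous value, so short spikes are smoothed out.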

`pidstat` (part of the sysstat package) can produce output that can be easily parsed.

With the Python script, monitoring is started as `$ python procmon.py -s localhost -f chromium -r 'chromium.*'`. Then, after opening Grafana, authenticating as admin:admin and setting up the datasource, you can plot a chart.

Instead of a Python script sending the metrics to Statsd, telegraf (and its procstat input plugin) can be used to send the metrics to Graphite directly. Only a minimal telegraf configuration is needed, and the Grafana part is the same, except for the metric names.
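Since `pidstat` output is easy to parse, a small parser might look like the sketch below. It assumes the single-line-per-sample layout printed by `pidstat -h`, where the column header line starts with `#`. The function name `parse_pidstat` is hypothetical, and values are left as strings.

```python
def parse_pidstat(text):
    """Turn `pidstat -h`-style text into a list of {column: value} dicts.

    Assumptions: the header line starts with '#', and the last column
    (the command) is the only one that may contain spaces.
    """
    header = None
    rows = []
    for line in text.splitlines():
        fields = line.split()
        if not fields:
            continue  # blank separator line
        if fields[0] == "#":
            header = fields[1:]  # (re)read column names
            continue
        if header is None or len(fields) < len(header):
            continue  # banner line or truncated row
        # Everything past the fixed columns belongs to the command.
        fixed = fields[: len(header) - 1]
        command = " ".join(fields[len(header) - 1:])
        rows.append(dict(zip(header, fixed + [command])))
    return rows
```

Keeping the header-driven mapping means the same function works whichever metric groups were requested, as long as the header line is present.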

# Linux: monitor CPU and memory usage

It may seem overkill for a simple one-off test, but for something like a several-day debugging session it is, for sure, reasonable. The setup uses a handy all-in-one raintank/graphite-stack image (from Grafana's authors) plus the psutil and statsd client packages: `$ sudo apt-get install python-statsd python-psutil # or via pip`. The monitoring itself then runs in another terminal, after the target process has been started.

Another option is `pip install memory_profiler`. The package provides RSS-only sampling (plus some Python-specific options). It can also record a process with its child processes (see `mprof --help`). By default this pops up a Tkinter-based chart explorer (python-tk may be needed), from which the chart can be exported.

To record several processes over the same time window with psrecord, start one recorder per process in the background: `psrecord $(pgrep proc-name1) --interval 1 --duration 60 --plot plot1.png &` and `psrecord $(pgrep proc-name2) --interval 1 --duration 60 --plot plot2.png &`.
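For the Statsd route mentioned above, the wire format is simple enough to show without a client library. The sketch below sends a gauge over UDP in the plain StatsD text format (`name:value|g`). The helper names, the metric name in the usage note and the default port 8125 are assumptions for illustration, and this is not the `procmon.py` script itself.

```python
import socket


def gauge_packet(name, value):
    """Encode one StatsD gauge in the plain text protocol: name:value|g."""
    return f"{name}:{value}|g".encode("ascii")


def send_gauge(name, value, host="localhost", port=8125):
    """Fire-and-forget a gauge over UDP.

    Assumption: a StatsD-compatible daemon listens on host:port
    (8125 is the conventional default).
    """
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(gauge_packet(name, value), (host, port))
```

A monitoring loop would call something like `send_gauge("chromium.rss", rss_bytes)` once per sample. Because UDP is connectionless, a missing daemon does not stop the loop, which suits long-running monitoring.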

The Python psrecord package does exactly this. Install it with `pip install psrecord # local user install` and, for plotting, `sudo apt-get install python-matplotlib python-tk # for plotting or via pip`.

For a single process it's the following (stopped by Ctrl+C): `psrecord $(pgrep proc-name1) --interval 1 --plot plot1.png`

For several processes, a bash script (`#!/bin/bash`) is helpful to synchronise the charts.
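The same synchronisation idea can be sketched in Python: start one `psrecord` per process at nearly the same moment and give all of them the same duration. The helper names `psrecord_command` and `record_together` are hypothetical. The sketch assumes `psrecord` is on `PATH` and uses only its `--interval`, `--duration` and `--plot` options.

```python
import subprocess


def psrecord_command(pid, plot_path, interval=1, duration=None):
    """Build the argv list for one psrecord run."""
    cmd = ["psrecord", str(pid), "--interval", str(interval), "--plot", plot_path]
    if duration is not None:
        cmd += ["--duration", str(duration)]
    return cmd


def record_together(targets, interval=1, duration=60):
    """Start all recorders first, then wait for every one of them.

    `targets` maps a PID to the plot file it should produce. Sharing
    `interval` and `duration` keeps the charts' time axes comparable.
    """
    procs = [
        subprocess.Popen(psrecord_command(pid, path, interval, duration))
        for pid, path in targets.items()
    ]
    return [proc.wait() for proc in procs]
```

For example, `record_together({1234: "plot1.png", 5678: "plot2.png"})` would record both PIDs for 60 seconds and return their exit codes.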

The following addresses a history graph of some sort.

Returning to the problem of process analysis frequently enough, and not being satisfied with the solutions I described originally, I decided to write my own. It's a pure-Python CLI package, including its couple of dependencies (no heavy Matplotlib). It can potentially plot many metrics from procfs, supports JSONPath queries to the process tree, has basic decimation/aggregation (Ramer-Douglas-Peucker and moving average), filtering by time ranges and PIDs, and a couple of other things.

Recording starts with `procpath record -i 1 -r 120 -d ff.sqlite '$.children[?("firefox" in …`. This records all processes with "firefox" in their cmdline 120 times, once per second (a query by PID would end in `= 42)]'`).

RSS and CPU usage of a single process (or several) out of all recorded would look like: `procpath plot -d ff.sqlite -q rss -p 123 -f rss.svg` and `procpath plot -d ff.sqlite -q cpu -p 123 -f cpu.svg`. The charts are actually interactive Pygal SVGs.
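To illustrate what Ramer-Douglas-Peucker decimation does to a recorded series, here is a generic textbook sketch. It is not taken from procpath, and the function names are hypothetical. Points are `(time, value)` tuples, and `epsilon` is the largest deviation from a straight line that may be discarded.

```python
import math


def perpendicular_distance(point, start, end):
    """Distance from `point` to the line through `start` and `end`."""
    (x, y), (x1, y1), (x2, y2) = point, start, end
    dx, dy = x2 - x1, y2 - y1
    if dx == 0 and dy == 0:
        return math.hypot(x - x1, y - y1)  # degenerate segment
    return abs(dy * x - dx * y + x2 * y1 - y2 * x1) / math.hypot(dx, dy)


def rdp(points, epsilon):
    """Keep only the points needed to stay within `epsilon` of the original."""
    if len(points) < 3:
        return list(points)
    start, end = points[0], points[-1]
    # Find the interior point farthest from the start-end chord.
    index, max_dist = 0, 0.0
    for i in range(1, len(points) - 1):
        dist = perpendicular_distance(points[i], start, end)
        if dist > max_dist:
            index, max_dist = i, dist
    if max_dist > epsilon:
        # That point matters: simplify both halves around it.
        left = rdp(points[: index + 1], epsilon)
        right = rdp(points[index:], epsilon)
        return left[:-1] + right  # drop the duplicated split point
    return [start, end]
```

On a flat series everything between the endpoints is dropped, while a spike such as a sudden RSS jump survives. The recursive form is fine for short series; very long recordings would need an iterative variant to avoid deep recursion.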
